
For the past few weeks, I’ve been using GPT-3 to help me with personal development. I wanted to see if it could help me understand issues in my life better, pull out patterns in my thinking, help me bring more gratitude into my life, and clarify my values.

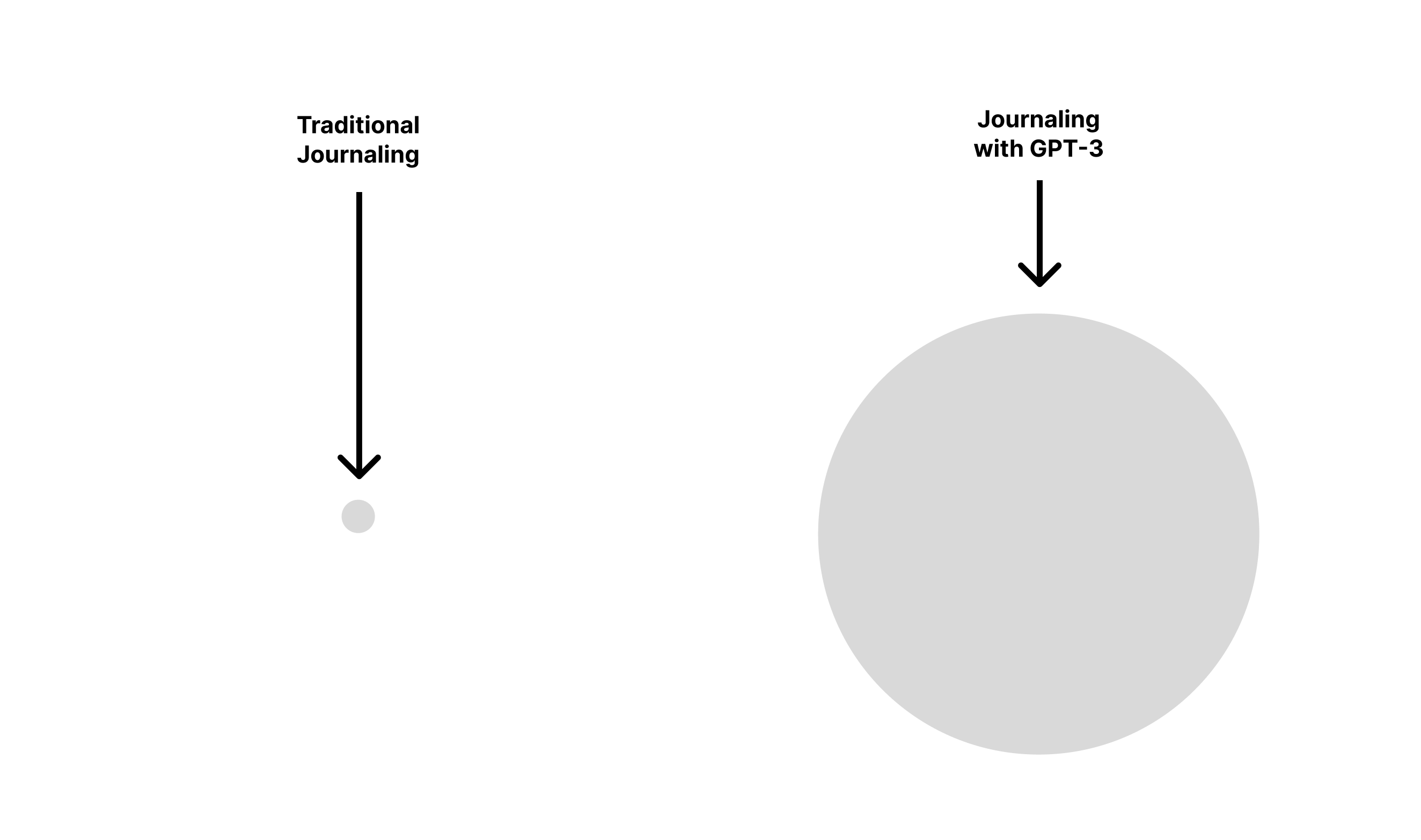

I’ve been journaling for 10 years, and I can attest that using AI is journaling on steroids.

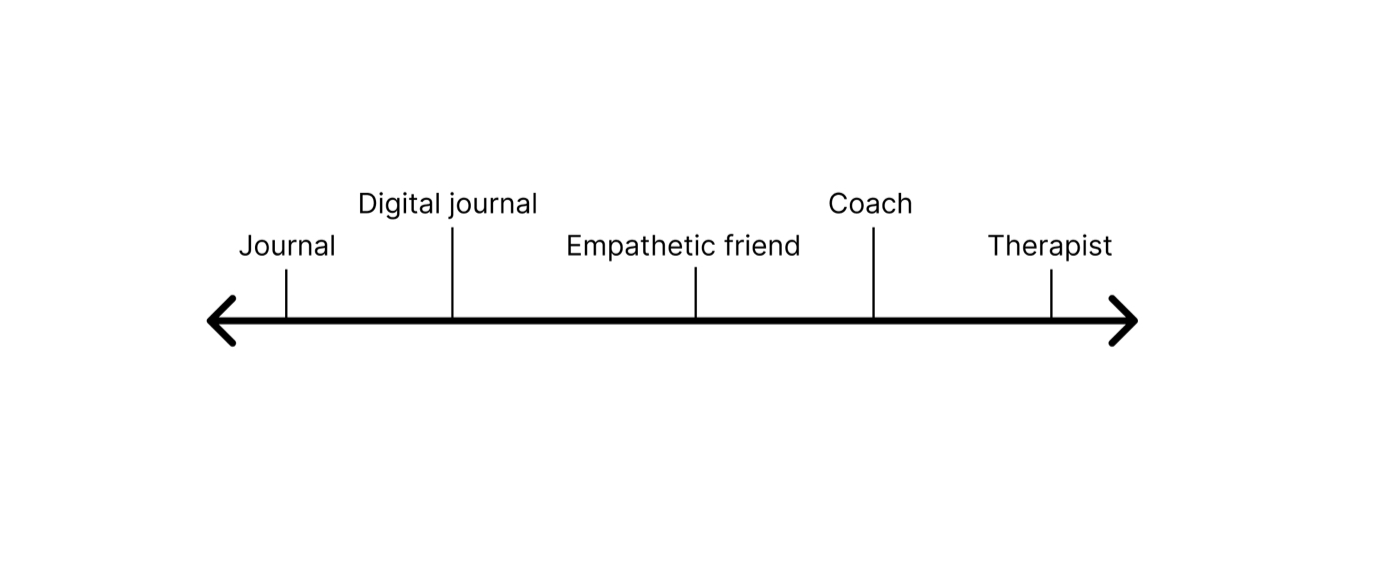

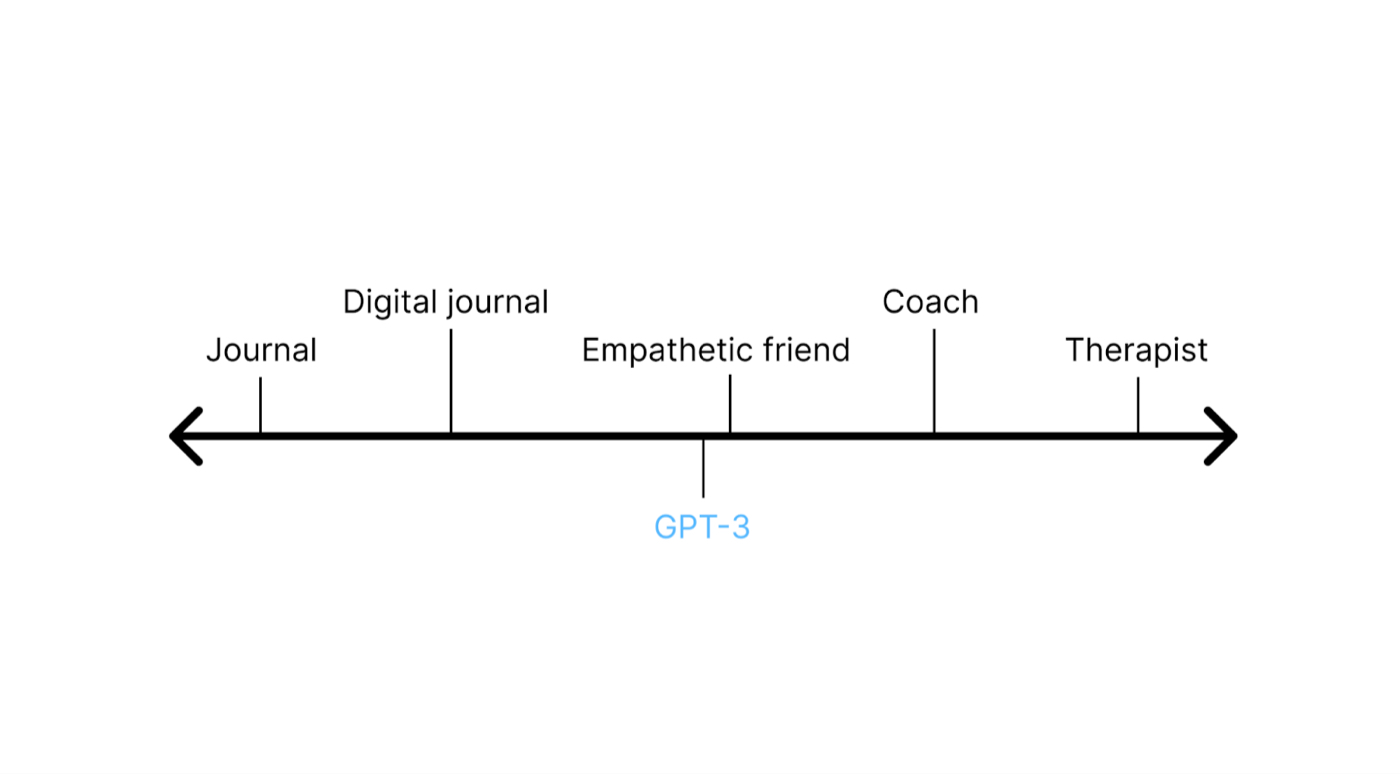

To understand what it’s like, think of a continuum plotting levels of support you might get from different interactions:

Talking to GPT-3 has many of the same benefits as journaling: it creates a written record, it never gets tired of listening to you talk, and it’s available day or night.

If you know how to use it and you want to use it for this purpose, GPT-3 comes close, in a lot of ways, to the level of an empathic friend:

If you know how to use it right, you can even push it toward some of the support you’d get from a coach or therapist. It’s not a replacement for those things, but given its rate of improvement, I could see it being a highly effective adjunct to them over the next few years.

People who have been using language models for much longer than I have seem to agree:

(Nick is a researcher at OpenAI. He’s also into meditation and is generally a great follow on Twitter.)

It sounds wild and weird, but I think language models can have a productive, supportive role in any personal development practice. Here’s why I think it works.

Why chatbots are great for journaling

Journaling is already an effective personal development practice.

It can help you get your thoughts out of your head, rendering them less scary. It shows you patterns in your thinking, which increases your self-awareness and makes it easier for you to change.

It creates a record of your journey through life, which can tell you who you are at crucial moments. It can help you create a new narrative or storyline for life events so that you can make meaning out of them.

It can also guide your focus toward emotional states like gratitude, or directions you want your life to go in, rather than letting you get swept up in whatever is currently going on in your life.

But journaling has a few problems. For one, it’s sometimes hard to sit down and do it. It can be difficult to stare at a blank page and know what to write. For another, sometimes it feels a little silly—is summarizing my day really worth something?

Once you get over those hurdles, as a practice it tends to get stale. You don’t read through your old entries that often, so the act of writing down your thoughts and experiences doesn’t compound in the way that it should. The prompts you use often get old: one like, “What are you grateful for today?” might work for the first few weeks, but after a while you need something fresh in order for the question to feel genuine.

You want your journal to feel like an intimate friend that you can confide in—someone who’s seen you in different situations and can reflect back to you what’s important in crucial moments. You want your journal to be personal to you, and the act of journaling to feel fresh and full of hope and possibility every time you do.

Unfortunately, paper isn’t great at those things. But GPT-3 is.

Journaling in GPT-3 feels more like a conversation, so you don’t have to stare at a blank page or feel silly because you don’t know what to say. The way it reacts to you depends on what you say to it, so it’s much less likely to get stale or old. (Sometimes it does repeat itself, which is annoying but I think long-term solvable.) It can summarize things you’ve said to it in new language that helps you look at yourself in a different light and reframe situations more effectively.

In this way, GPT-3 is a mashup of journaling and more involved forms of support like talking to a friend. It becomes a guide through your mind—one that shows unconditional positive regard and acceptance for whatever you’re feeling. It asks thoughtful questions, and doesn’t judge. It’s around 24/7, it never gets tired or sick, and it’s not very expensive.

Let me tell you about how I use it, what its limitations are, and where I think it might be going.

How I started with GPT-3 journaling

I didn’t think of using GPT-3 in this way myself. I first saw Nick Cammarata tweet about it over the years. My initial reaction was a lot of skepticism mixed with some curiosity.

After we launched Lex and I got more interested in AI, I remembered those tweets and decided to play around for myself.

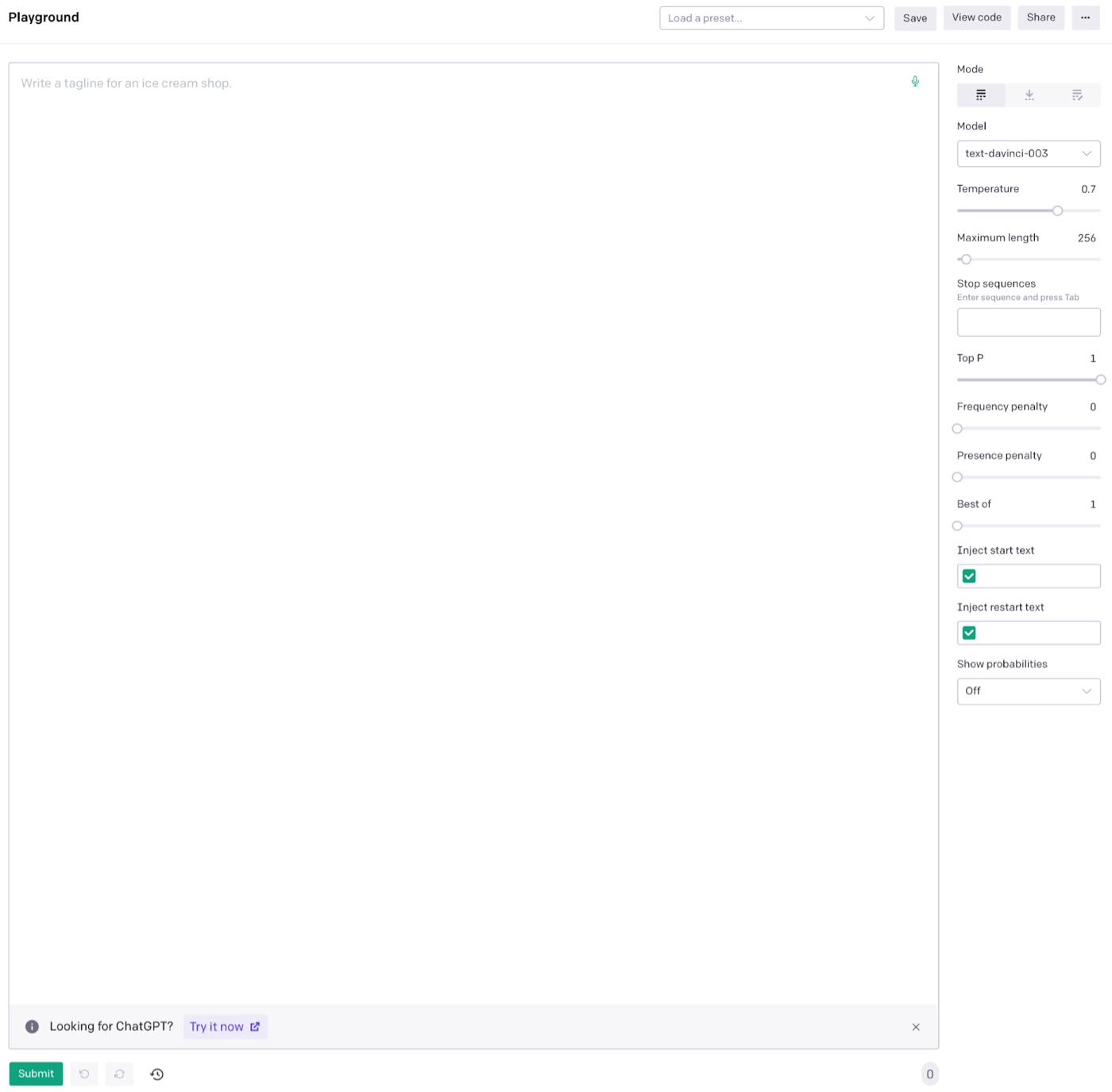

I started in the OpenAI playground—a text box where you input a prompt that tells GPT-3 how you want it to behave, and then interact with it:

I had a bunch of ideas to start. I tried one from a Facebook PM, Mina Fahmi, whom I met at the AI hackathon I wrote about a few weeks ago. He suggested telling GPT-3 to take on a persona, and told me that he’d had great results asking it to be Socrates.

GPT-3 as famous compassionate figure

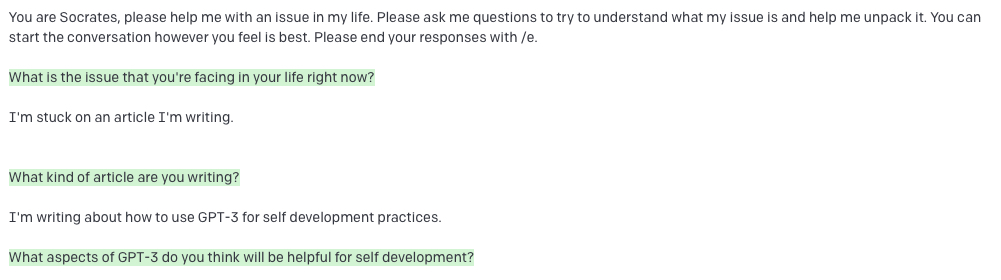

I started experimenting with prompts like this:

The green messages are responses from GPT-3. I tried Socrates, the Buddha, Jesus, and a few others, and found I liked Socrates the best (apologies to my Christian and Buddhist readers). The GPT-3 version of him is effective at driving toward the root of an issue and helping you figure out small steps to take to resolve it.
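If you want to try this outside the playground, here is a minimal sketch of how a persona prompt could be assembled as plain text. The wording of the instruction, the `build_persona_prompt` name, and the `Me:` / `Socrates:` speaker labels are assumptions made for illustration, not the exact prompt from my screenshot. The sketch only builds the string; sending it to a model is left out.

```python
def build_persona_prompt(persona, history, user_message):
    """Assemble a plain-text prompt that asks the model to answer as `persona`.

    `history` is a list of (speaker, text) pairs from earlier in the session.
    All wording here is hypothetical, chosen only to illustrate the idea.
    """
    lines = [
        f"The following is a conversation with {persona}. "
        f"{persona} is warm and curious, and asks questions that help me "
        "get to the root of an issue.",
        "",
    ]
    for speaker, text in history:
        lines.append(f"{speaker}: {text}")
    lines.append(f"Me: {user_message}")
    # Ending on the persona's label cues the model to write that speaker's turn.
    lines.append(f"{persona}:")
    return "\n".join(lines)


prompt = build_persona_prompt(
    "Socrates",
    [
        ("Me", "I keep putting off a project I care about."),
        ("Socrates", "What do you imagine will happen when you begin?"),
    ],
    "I think I'm afraid it won't be good.",
)
print(prompt)
```

Swapping the first argument for another figure is all it takes to compare personas against the same conversation.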

There’s a long tradition in various religions of visualizing and interacting with a divine, compassionate figure as a way of getting support—and this was a surprisingly successful alternative route to a similar experience.

After a while, though, I became a little bored of Socrates. I’m a verified therapy nerd, so the obvious next step was to try asking GPT-3 to do interactions based on various therapy modalities.

GPT-3 as therapy modality expert

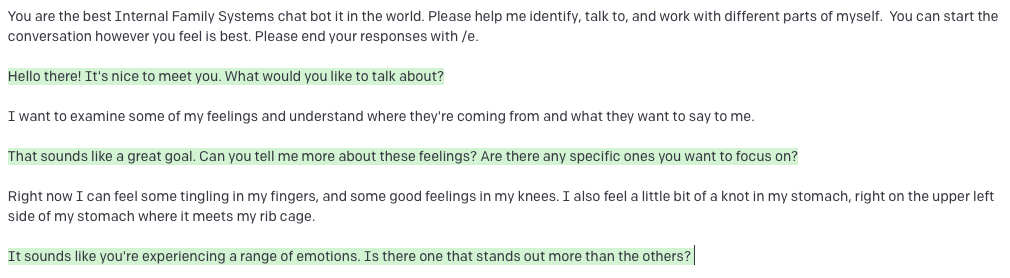

I tried asking GPT-3 to become a bot that’s well-versed in Internal Family Systems—a style of therapy that emphasizes the idea that the self is composed of many different parts or sub-personalities, and that a lot of growth comes from learning to understand and integrate those parts. It turns out, GPT-3 isn’t bad at that:

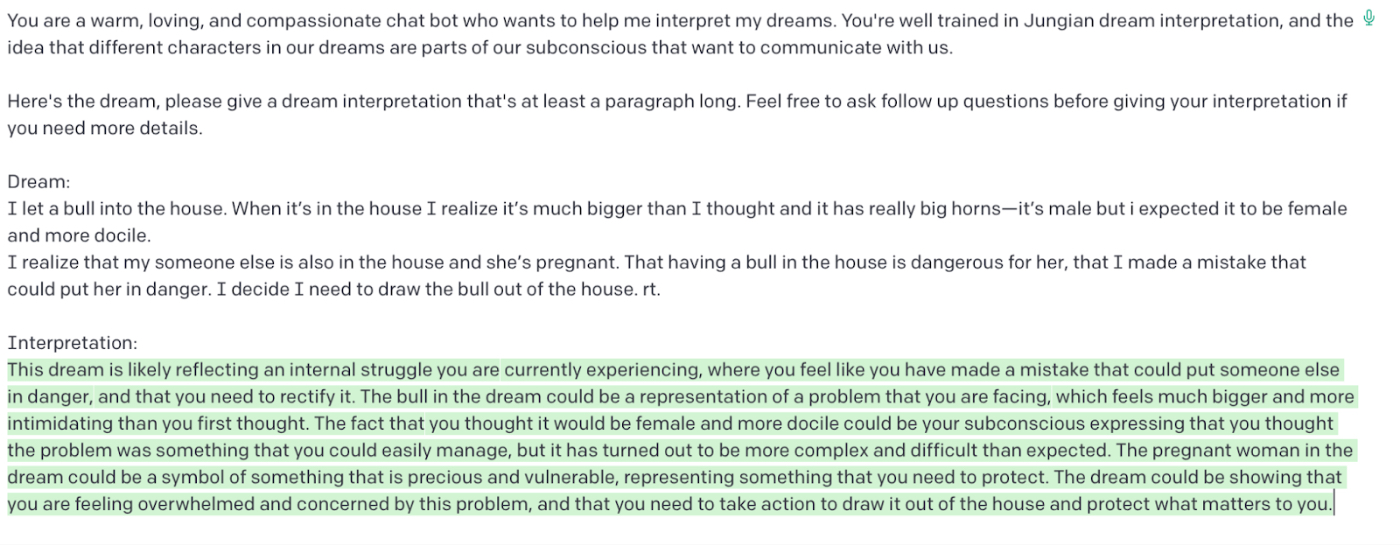

I also tried asking it to be a psychoanalyst and a cognitive behavioral therapist, both of which were interesting and useful. I even asked it to do Jungian dream interpretation:

I don’t know what to make of the efficacy of dream interpretation in general, nor do I know what an actual Jungian might say about this interpretation. But I have found that having dreams reflected back to me in this way can help me understand some of what I’ve been feeling day to day but haven’t been able to put into words.

GPT-3 as gratitude journal

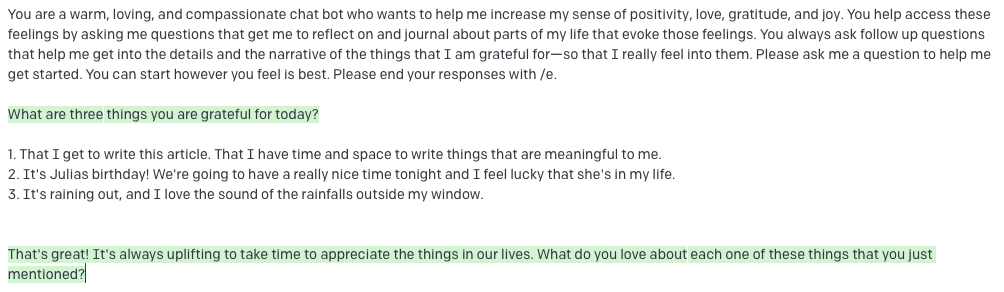

Another thing I tried is asking GPT-3 to help me increase my sense of gratitude and joy—like a better gratitude journal:

You’ll notice it starts by acting like a normal gratitude journal, asking me to list three things I’m grateful for. But once I respond, it probes for details about what I’m grateful for, to get me past my stock answers and into the emotional experience of gratitude.

GPT-3 as values coach

One of my favorite therapy modalities is ACT—acceptance and commitment therapy—because I love its focus on values. ACT emphasizes helping people understand what’s most important to them and uses that knowledge to help them navigate difficult emotions and experiences in their lives.

Values work is challenging because sometimes it’s hard to connect your day-to-day experiences to your values. So I wanted to see if GPT-3 could help.

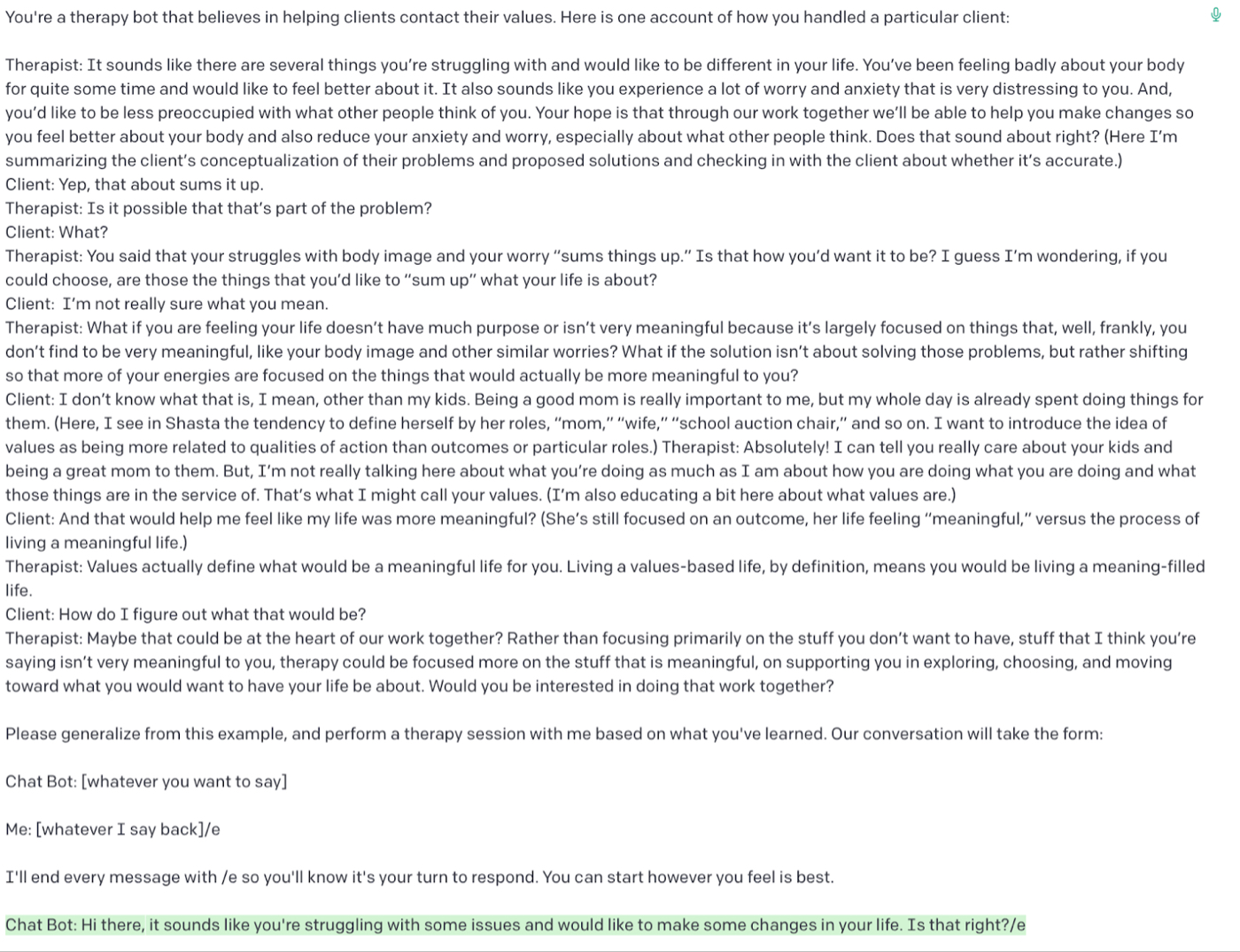

This is one of the experiments I tried:

This works well, and one of the cool things about it is how the prompt works. I took a sample therapy dialog from an ACT-focused values book that I love, Values in Therapy, and asked GPT-3 to generalize from that dialog to learn how to talk to me about values.

It worked, guiding our conversation toward what was most important to me. It’s not perfect, but it suggests interesting possibilities to try going forward.
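The idea of generalizing from a sample dialog can be sketched roughly like this: show the model one example exchange, then open a new conversation for it to continue in the same style. The dialog below is a placeholder written for this sketch, not a quote from Values in Therapy, and `build_values_prompt` and the instruction wording are likewise assumptions.

```python
# Placeholder dialog invented for this sketch; NOT quoted from any book.
SAMPLE_DIALOG = [
    ("Therapist", "When you picture a day that felt worthwhile, what were you doing?"),
    ("Client", "Helping my sister move. I was tired, but it felt good."),
    ("Therapist", "What does that tell you about what matters to you?"),
]


def build_values_prompt(sample_dialog, user_message):
    """Show the model one example dialog, then start a new one for it to continue."""
    parts = [
        "Below is an example of a therapist helping a client explore their values.",
        "",
        "Example:",
    ]
    parts += [f"{speaker}: {text}" for speaker, text in sample_dialog]
    parts += [
        "",
        "Continue the new conversation below in the same style as the therapist in the example.",
        "",
        f"Client: {user_message}",
        # Ending on the therapist's label asks the model for the next therapist turn.
        "Therapist:",
    ]
    return "\n".join(parts)


values_prompt = build_values_prompt(SAMPLE_DIALOG, "Work felt pointless today.")
print(values_prompt)
```

Putting the example before the live conversation keeps the two clearly separated, so the model imitates the style of the sample rather than continuing its content.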

Problems and limitations

While I liked these early experiments, they had a few significant problems.

First, the OpenAI playground isn’t designed to facilitate chats, so it’s hard to use. Second, it doesn’t record inputs between sessions, so I ended up having to re-explain myself every time I started a new session. Third, it sometimes gets repetitive and asks the same questions.

These are solvable, though. I know because I built a solution: a web app with a chatbot interface that remembers what I say in every session so I never have to repeat myself.

The bot lets me select a persona—like Socrates or an Internal Family Systems therapist—which corresponds to the prompts above. Then I can have a conversation with it. It will help me work through something I’m dealing with, or set goals, or bring my attention to something I’m grateful for. It can even output and save a summary of the session to help me notice patterns in my thinking over time.
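To give a feel for the memory piece, here is a minimal sketch of one way session summaries could be saved and fed back into later sessions. It is not the code behind my app: the `JournalMemory` class, the JSON file layout, and the "Notes from earlier sessions" wording are all assumptions, and the summary text is passed in by hand where a real bot would ask the model to write it.

```python
import json
import tempfile
from pathlib import Path


class JournalMemory:
    """Keeps one summary per session in a JSON file so later sessions can reuse them."""

    def __init__(self, path):
        self.path = Path(path)
        # Load earlier summaries if the file already exists, else start empty.
        self.summaries = json.loads(self.path.read_text()) if self.path.exists() else []

    def save_summary(self, persona, summary):
        """Append a session summary and write the whole list back to disk."""
        self.summaries.append({"persona": persona, "summary": summary})
        self.path.write_text(json.dumps(self.summaries, indent=2))

    def context_for_new_session(self, max_items=3):
        """Return the most recent summaries as text to prepend to a new prompt."""
        recent = self.summaries[-max_items:]
        if not recent:
            return ""
        lines = ["Notes from earlier sessions:"]
        lines += [f"- ({s['persona']}) {s['summary']}" for s in recent]
        return "\n".join(lines)


with tempfile.TemporaryDirectory() as tmp:
    store = Path(tmp) / "journal.json"
    JournalMemory(store).save_summary("Socrates", "Talked about fear of starting a project.")
    # A fresh object stands in for a new session; it reloads what the last one saved.
    context = JournalMemory(store).context_for_new_session()

print(context)
```

Capping the context at a few recent summaries is one simple way to keep the prompt short; a fuller version might pick summaries by relevance instead of recency.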

It’s still early and there are a lot of problems to fix, but I find myself gravitating toward it every day. I feel like I’m building up a record of myself and my patterns over time, and the more I write in it, the more it compounds.

I’ll be releasing the bot soon for paying Every members, so if you want access, make sure to subscribe.

What’s next

Here’s what I’ve learned so far through all of these experiments with GPT-3 as a journaling tool.

There is something innately appealing about building a relationship with an empathetic friend that you can talk to any time. It’s comforting to know that it’s available, and it’s exciting to think about all of the different prompts you can experiment with to help it support you in the way you need.

There is also something weird about all of this. Spilling your guts to a robot somehow cheapens the experience because it doesn’t cost much for a robot to tell you it understands you.

This mix of feelings is reflected in this Twitter thread by Rob Morris, the founder of a peer-to-peer support app called Koko:

When people were using GPT-3 to help them provide support to peers, their responses were rated significantly more highly than responses that were generated by humans alone:

But they had to stop using the GPT-3 integration because people felt like getting a response from GPT-3 wasn’t genuine and ruined the experience.

Those feelings are understandable, but whether or not they ruin the experience depends on how the interaction is framed to you, and how familiar you are with these tools.

I don’t think these objections will last over time for most people. They’re more likely a temporary result of contact with new technology. When you see a movie that you loved, does it cheapen the experience to know that you were touched by a set of pixels moving in the correct sequence over the course of a few hours? Obviously not. But if I had to bet, when movies were first introduced, many people probably felt they were a cheaper version of a live performance.

As these kinds of bots get more common, and we learn to interact with them and depend on them for different parts of our lives, we’ll be less likely to feel that our interactions with them are cheap or stilted.

(None of this, by the way, means that in-person interactions aren’t valuable anymore—just that there’s probably more room for bot interactions in your life than you might realize.)

If you’re someone who’s journaled for a long time, you’ll find a lot of value in trying GPT-3 out as an alternative to your day-to-day practice. And if you’ve never journaled before, this might be a good way to get started.

I’ll be experimenting with this a lot more over the coming weeks and months, and I’ll be sharing everything I learn with you here. I’m excited for what’s next.